As cyber threats grow more sophisticated, organizations are turning to threat hunting, a proactive practice that searches for intruders instead of waiting for alerts to fire. With the integration of artificial intelligence, this practice is changing substantially, enabling security teams to detect and neutralize threats more quickly and more accurately than manual review alone allows.

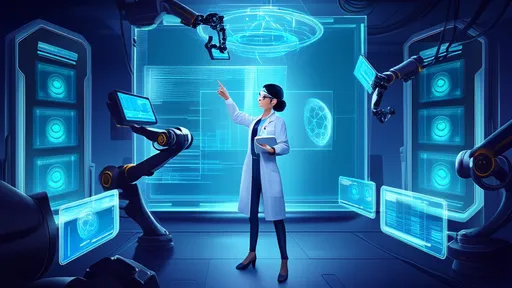

The concept of threat hunting is not new; it has been a part of cybersecurity discussions for years. However, the manual processes that once defined it are no longer sufficient in the face of sophisticated attacks. Artificial intelligence is now augmenting human expertise, allowing hunters to sift through massive datasets, identify subtle anomalies, and predict potential attack vectors before they can be exploited. This synergy between human intuition and machine precision is redefining what it means to be proactive in cybersecurity.

One of the most significant advantages of AI-driven threat hunting is its ability to handle the sheer volume of data generated by modern networks. Traditional methods often rely on predefined rules and signatures, which can miss novel or evolving threats. In contrast, AI algorithms can analyze patterns across diverse sources—logs, endpoints, network traffic—and uncover hidden correlations that might indicate malicious activity. This capability is particularly crucial in identifying advanced persistent threats (APTs), which often operate stealthily over long periods.
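To make the idea of correlating activity across sources concrete, here is a minimal sketch in Python. It is a deterministic stand-in, not a learned model: it groups events by host and reports any host seen by several distinct sources within a short time window. The event fields (`host`, `source`, `ts`), the function name `correlate`, and the default window and threshold are all assumptions made for illustration, not part of any particular product.

```python
from collections import defaultdict


def correlate(events, window_seconds=300, min_sources=2):
    """Return (host, sources) pairs for hosts that appear in at least
    `min_sources` distinct data sources within `window_seconds`.

    `events` is a list of dicts with hypothetical keys:
    'host' (str), 'source' (str), 'ts' (seconds as int or float).
    """
    by_host = defaultdict(list)
    for event in events:
        by_host[event["host"]].append(event)

    hits = []
    for host, host_events in by_host.items():
        host_events.sort(key=lambda e: e["ts"])
        for i, first in enumerate(host_events):
            # Collect every event that starts within the window of `first`.
            in_window = [
                e for e in host_events[i:]
                if e["ts"] - first["ts"] <= window_seconds
            ]
            sources = {e["source"] for e in in_window}
            if len(sources) >= min_sources:
                hits.append((host, sorted(sources)))
                break  # one hit per host is enough for triage
    return hits


# Illustrative, made-up events.
events = [
    {"host": "ws-17", "source": "endpoint", "ts": 1000},
    {"host": "ws-17", "source": "network", "ts": 1120},
    {"host": "ws-17", "source": "log", "ts": 1200},
    {"host": "db-02", "source": "log", "ts": 1000},
    {"host": "db-02", "source": "log", "ts": 5000},
]
correlated = correlate(events)
print(correlated)
```

In practice a model would likely weigh many more features than source count, but the sketch shows why combining logs, endpoint telemetry, and network traffic can surface activity that no single source flags on its own.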

Moreover, machine learning models can continuously learn from new data, adapting to emerging tactics and techniques used by adversaries. This dynamic learning process ensures that threat hunters are not relying on outdated intelligence but are instead equipped with insights that reflect the current threat landscape. For instance, AI can flag unusual user behavior or unauthorized access attempts that deviate from established baselines, prompting investigators to delve deeper into potential compromises.
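The baseline idea can be sketched with a simple statistical check. The example below is an assumption-laden illustration rather than a description of any specific tool: it treats each user's past daily login counts as the baseline and flags a user whose current count lies more than a chosen number of standard deviations from their own mean (a z-score test). The function name `flag_deviations`, the threshold of 3.0, and the sample data are hypothetical.

```python
from statistics import mean, stdev


def flag_deviations(baseline, current, threshold=3.0):
    """Return {user: z_score} for users whose current value deviates from
    their own historical baseline by more than `threshold` standard deviations.

    `baseline` maps user -> list of past counts; `current` maps user -> today's count.
    """
    flagged = {}
    for user, history in baseline.items():
        if user not in current or len(history) < 2:
            continue  # not enough data to form a baseline
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change as a deviation.
            if current[user] != mu:
                flagged[user] = float("inf")
            continue
        z = (current[user] - mu) / sigma
        if abs(z) > threshold:
            flagged[user] = round(z, 2)
    return flagged


# Illustrative, made-up daily login counts.
baseline = {
    "alice": [4, 5, 6, 5, 4, 6, 5],
    "bob": [20, 22, 19, 21, 20, 23, 21],
}
current = {"alice": 5, "bob": 60}

suspicious = flag_deviations(baseline, current)
print(suspicious)
```

A production system would typically refresh the baseline as new data arrives, which is the continuous-learning behavior described above; here the baseline is fixed to keep the sketch short.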

The integration of AI also enhances the efficiency of threat hunting teams. By automating routine tasks such as data collection and initial analysis, AI allows human experts to focus on higher-level decision-making and investigation. This division of labor not only accelerates the hunting process but also reduces the risk of burnout among cybersecurity professionals, who are often overwhelmed by alert fatigue. In essence, AI acts as a force multiplier, empowering hunters to achieve more with limited resources.

However, the adoption of AI in threat hunting is not without challenges. Concerns around data privacy, algorithm bias, and the need for transparency in AI decision-making must be addressed. Organizations must ensure that their AI systems are trained on diverse and representative datasets to avoid skewed results. Additionally, human oversight remains critical; AI should complement, not replace, the nuanced judgment of experienced threat hunters.

Looking ahead, the future of AI-assisted threat hunting appears promising. As natural language processing and predictive analytics advance, we can expect even more sophisticated tools that provide contextual insights and recommend actionable responses. Collaboration between AI systems and human hunters will likely become seamless, with real-time feedback loops enhancing both detection and response capabilities.

In conclusion, the fusion of artificial intelligence and threat hunting represents a paradigm shift in cybersecurity. By leveraging AI's analytical prowess, organizations can move from a defensive posture to one of active engagement with threats. While challenges persist, the potential benefits—faster detection, reduced dwell time, and improved resilience—make this integration a cornerstone of modern security strategies. As cyber threats grow in complexity, so too must our approaches to combating them, and AI-driven threat hunting offers a powerful path forward.

By /Aug 26, 2025
