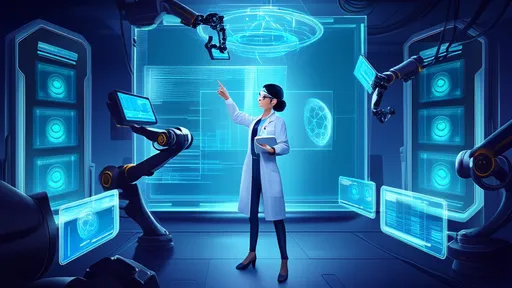

The landscape of 3D asset creation is undergoing a seismic shift, driven by the relentless advancement of generative artificial intelligence. For decades, the process of building the intricate 3D models that populate our video games, films, and virtual simulations has been a domain reserved for highly skilled artists and technical wizards, wielding complex software and investing hundreds, sometimes thousands, of hours into a single asset. This painstaking, manual process is now being fundamentally re-engineered, not by replacing the artist, but by augmenting their capabilities in ways previously confined to science fiction.

At the heart of this transformation is a move from purely manual, polygon-by-polygon sculpting to a new paradigm of AI-assisted ideation and generation. The initial phase of any 3D project—conceptualization—has been dramatically accelerated. Instead of staring at a blank canvas, artists can now engage in a dynamic dialogue with an AI. By feeding a generative model a simple text prompt—"a weathered, moss-covered stone gargoyle perched on a gothic cathedral spire" or "a sleek, cybernetic arm with glowing orange circuits"—creatives can generate a plethora of visual concepts, mood boards, and base meshes in minutes. This is not about the AI dictating the design; it's about sparking inspiration, exploring variations, and overcoming the intimidating inertia of starting from zero. The artist's role evolves from primary drafter to creative director, curating and refining the AI's output.

The technical grunt work of modeling is seeing perhaps the most profound change. Generative AI models, particularly those trained on large datasets of 3D geometry, can now interpret a 2D concept image or a rough block-out and generate a detailed 3D mesh. This process, often referred to as image-to-3D generation or 3D reconstruction, automates what was once a tedious and technically demanding task. For hard-surface objects like vehicles or armor, AI can generate clean geometry with well-organized edge loops. For organic shapes like characters or creatures, it can produce a base mesh with realistic musculature and proportions, which the artist can then sculpt further. This doesn't eliminate the need for expertise in tools like ZBrush or Maya; rather, it allows the artist to focus their skilled labor on the high-value stages of detailing and stylization instead of the foundational blocking.

Texturing, the process of applying color, surface detail, and material properties to a 3D model, has been similarly revolutionized. AI-powered tools can now analyze a model's geometry and automatically generate plausible, high-resolution texture maps—including albedo, normal, roughness, and displacement maps—based on a text description. An artist can simply describe the desired material, such as "tarnished copper with green patina" or "wet, muddy earth with embedded pebbles," and the AI will synthesize a complex, tileable texture that reacts correctly to virtual light. This capability drastically reduces the time spent searching texture libraries or painting maps manually, ensuring artistic consistency and freeing up creators to focus on the overall look and feel of a scene.
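To make the map types above a little more concrete, here is a minimal sketch of how two of them relate: a normal map can be derived from a height (displacement) field by taking its slope at each texel. This is a standard graphics technique, not a description of how any particular AI texturing tool works. The function name `height_to_normals` and the `strength` parameter are illustrative assumptions. Sampling wraps around at the edges, which is what keeps the result tileable.

```python
import math

def height_to_normals(height, strength=1.0):
    """Convert a 2D height field (list of rows) into unit surface normals.

    Hypothetical helper for illustration. Edge samples wrap around, so the
    resulting normal map tiles without visible seams.
    """
    rows, cols = len(height), len(height[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Central differences approximate the slope in x and y.
            dx = (height[y][(x + 1) % cols] - height[y][(x - 1) % cols]) * 0.5
            dy = (height[(y + 1) % rows][x] - height[(y - 1) % rows][x]) * 0.5
            # The normal tilts away from the uphill direction.
            nx, ny, nz = -dx * strength, -dy * strength, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append((nx / length, ny / length, nz / length))
        normals.append(row)
    return normals
```

A perfectly flat height field yields normals that all point straight out of the surface, `(0, 0, 1)`; raising `strength` exaggerates bumps without touching the height data itself.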

Beyond static assets, generative AI is breathing life into worlds through animation. Procedural animation, while not new, is being supercharged by machine learning. AI systems can now be trained on motion capture data to generate fluid, realistic movements for characters. An animator can provide a starting pose and a desired end goal, and the AI will generate the most biomechanically plausible movement in between. This is especially powerful for crowd simulation, where generating unique, realistic movements for hundreds of background characters was previously computationally and artistically prohibitive. Now, an AI can manage this complexity, creating dynamic, living worlds that feel authentic.
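For a sense of what "generating the movement in between" means, the sketch below shows the classic non-learned baseline: spherical linear interpolation (slerp) of each joint's rotation between a start pose and an end pose. A trained model would replace this simple interpolation with motion learned from capture data; nothing here represents a specific AI system. The pose format (a dict mapping joint names to unit quaternions in `(w, x, y, z)` order) and the function names are assumptions made for illustration.

```python
import math

def slerp(q0, q1, t):
    """Spherical linear interpolation between two unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:
        # q and -q encode the same rotation; flip to follow the shorter arc.
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:
        # Rotations are nearly identical: plain lerp avoids dividing by ~0.
        out = tuple(a + t * (b - a) for a, b in zip(q0, q1))
    else:
        theta = math.acos(dot)
        s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        s1 = math.sin(t * theta) / math.sin(theta)
        out = tuple(s0 * a + s1 * b for a, b in zip(q0, q1))
    norm = math.sqrt(sum(c * c for c in out))
    return tuple(c / norm for c in out)

def inbetween(start_pose, end_pose, num_frames):
    """Return num_frames poses from start_pose to end_pose, endpoints included.

    Hypothetical pose format: {joint_name: unit quaternion}.
    """
    if num_frames < 2:
        raise ValueError("num_frames must be at least 2")
    frames = []
    for i in range(num_frames):
        t = i / (num_frames - 1)
        frames.append(
            {joint: slerp(start_pose[joint], end_pose[joint], t) for joint in start_pose}
        )
    return frames
```

Calling `inbetween(start, end, 24)` would give one second of motion at 24 frames per second. The result is smooth but mechanical, since every joint moves at a constant rate along the shortest arc; that gap is what data-driven in-betweening aims to close.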

The entire workflow is becoming more iterative and experimental. In the traditional pipeline, making significant changes late in the process (deciding a character's armor should be ornate bronze instead of plain steel, for example) could mean days of backtracking and rework. With generative AI, such a change can be initiated with an altered text prompt. The AI can re-texture the model, adjust the normal maps for new engravings, and even modify the geometry to fit the new aesthetic, all in a fraction of the time. This fosters a more agile and creative environment where artists are empowered to experiment without fear of crippling deadlines, ultimately leading to richer and more detailed final products.

However, this new frontier is not without its challenges and philosophical questions. The issue of training data looms large. Many generative models are trained on vast amounts of publicly available art from the internet, raising significant concerns about copyright, intellectual property, and the uncompensated use of artists' work. The industry is grappling with how to develop ethical AI that learns from licensed or synthetically generated data. Furthermore, there is a valid concern about the potential devaluation of certain technical skills. As AI handles more of the routine tasks, the market may shift to prioritize strong artistic direction, conceptual creativity, and the ability to guide AI systems over proficiency in specific software commands.

Despite these challenges, the overall trajectory is one of empowerment and expansion. Generative AI is not the end of the 3D artist; it is the evolution of the toolkit. It is democratizing aspects of 3D creation, making it accessible to smaller studios and individual creators who previously couldn't afford large art teams. It is taking on the repetitive, time-consuming tasks, allowing human creativity to soar to new heights and focus on what it does best: storytelling, emotion, and crafting unforgettable experiences. The workflow is being transformed from a linear, manual assembly line into a synergistic loop between human intent and machine execution, promising a future where the only limit to the worlds we can build is the breadth of our imagination.

By /Aug 26, 2025
