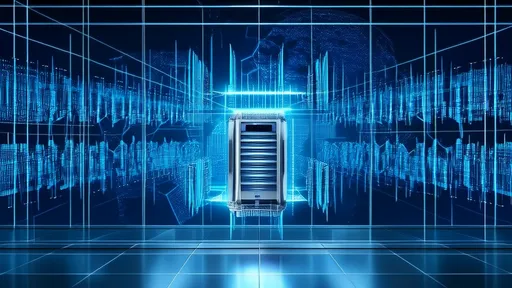

Cloud computing has given enterprises unprecedented flexibility and scalability, but it has also introduced a new layer of complexity. As organizations adopt hybrid and multi-cloud strategies to avoid vendor lock-in, optimize costs, and use best-of-breed services, managing communication between services spread across these environments has become a major challenge. The service mesh, a dedicated infrastructure layer that handles service-to-service communication, security, and observability, is designed to address it. Its real value, however, lies not in running within a single cloud but in providing a unified, consistent management plane across a fragmented hybrid cloud landscape.

In a typical hybrid cloud setup, applications and their microservices might be running on a combination of on-premises private clouds, public clouds like AWS, Azure, or Google Cloud, and even edge locations. This distribution, while beneficial, creates silos of management. Each environment often comes with its own native networking tools, security policies, and monitoring solutions. Without a unifying layer, DevOps and platform teams find themselves juggling multiple dashboards, writing environment-specific configurations, and struggling to maintain a holistic view of their entire application ecosystem. This is where a service mesh architected for hybrid environments shifts from a nice-to-have to a critical component of the infrastructure.

The core promise of a service mesh in this context is abstraction. It inserts itself as a transparent layer between services, typically through sidecar proxies like Envoy, which are deployed alongside each service instance, regardless of where that instance lives. This sidecar handles all the intricate logic for traffic routing, service discovery, encryption (mTLS), retries, timeouts, and collecting telemetry data. Because this logic is offloaded from the application code itself and into the sidecar, developers are freed to focus on business logic, while operators gain a powerful, centralized point of control. The magic for hybrid clouds is that this sidecar and its control plane can be deployed consistently across every cluster in every environment.
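To make the offloading concrete, here is a minimal sketch of the kind of retry logic a sidecar applies on behalf of an application. It is an illustration only, not how Envoy is implemented: `UpstreamError`, `call_with_retries`, and the default attempt count and delay are hypothetical names and values chosen for this example.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class UpstreamError(Exception):
    """Hypothetical error standing in for a failed call to another service."""


def call_with_retries(
    call: Callable[[], T],
    max_attempts: int = 3,
    base_delay_s: float = 0.05,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying on UpstreamError with exponential backoff.

    The delay doubles after each failed attempt. `sleep` is injectable so
    the behaviour can be checked without actually waiting.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")

    last_error: Optional[UpstreamError] = None
    for attempt in range(max_attempts):
        try:
            return call()
        except UpstreamError as err:
            last_error = err
            # Back off before the next attempt, but not after the final one.
            if attempt < max_attempts - 1:
                sleep(base_delay_s * (2 ** attempt))
    assert last_error is not None
    raise last_error
```

In a mesh, the application simply makes its outbound call once; a policy equivalent to the parameters above lives in mesh configuration rather than in each service's code, which is what lets operators change it uniformly across clusters.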

A unified service mesh control plane becomes the single source of truth for the entire distributed system. Through it, operators can define and enforce security policies, such as strict mutual TLS for all service communication between the on-prem data center and a public cloud VPC, so that the security posture is consistent everywhere. They can also implement sophisticated traffic management rules, like canary deployments in which a percentage of traffic from a service in Azure is gradually shifted to a new version of a dependent service running in AWS, all without the services being aware of the underlying cloud provider. Orchestration at this level previously required complex, custom-built tooling.
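The canary idea can be sketched as a weighted routing decision. The example below is a simplified illustration under stated assumptions: `pick_version` is a hypothetical helper, and it hashes a request ID into one of 100 buckets so the choice is deterministic. Real meshes commonly apply the weight as a per-request random split instead.

```python
import hashlib


def pick_version(request_id: str, canary_weight_percent: int) -> str:
    """Return "canary" or "stable" for a request.

    The request ID is hashed into a bucket from 0 to 99. Buckets below the
    canary weight go to the new version, so raising the weight only ever
    moves additional requests to the canary.
    """
    if not 0 <= canary_weight_percent <= 100:
        raise ValueError("canary_weight_percent must be between 0 and 100")

    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "canary" if bucket < canary_weight_percent else "stable"
```

Shifting traffic gradually then amounts to raising the weight in steps (for example 5, 25, 50, 100) while watching error rates, with the same rule applied regardless of which cloud hosts each version.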

Furthermore, the observability benefits are substantial. Because every sidecar emits metrics, access logs, and trace spans in the same format, telemetry from every service in every environment can be collected into a single pane of glass. This provides an end-to-end view of application health, performance, and dependencies. SRE teams can follow a request from the point it enters the mesh, through a public cloud, and back to a legacy system in a private data center, identifying latency spikes or failures wherever they occur, provided the services forward trace context headers on their outbound calls. This holistic observability is crucial for meeting performance SLAs and quickly diagnosing issues in a complex, geographically dispersed architecture.
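End-to-end tracing depends on each hop carrying the same trace ID forward. The sketch below shows one way that can look using the W3C Trace Context `traceparent` header format (`version-traceid-parentid-flags`). The function name `outgoing_traceparent` and the assumption that header names arrive lowercased in a plain dict are illustrative choices, and the validation is deliberately simplified.

```python
import re
import secrets
from typing import Mapping

# version "00", 32 hex chars of trace ID, 16 hex chars of parent span ID, 2 hex chars of flags
_TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def outgoing_traceparent(incoming_headers: Mapping[str, str]) -> str:
    """Build the traceparent header for an outbound call.

    If the inbound request carried a well-formed traceparent, its trace ID
    and flags are kept so the spans join the same trace. Otherwise a new
    trace is started. A fresh span ID is generated in both cases.
    """
    match = _TRACEPARENT.match(incoming_headers.get("traceparent", ""))
    if match:
        trace_id, _parent_id, flags = match.groups()
    else:
        # No usable context: start a new trace and mark it as sampled.
        trace_id, flags = secrets.token_hex(16), "01"

    span_id = secrets.token_hex(8)
    return f"00-{trace_id}-{span_id}-{flags}"
```

A service in one cloud that copies this header onto its call to a service in another lets the tracing backend stitch both spans into a single trace, whichever environments the hops ran in.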

However, implementing and operating a service mesh across a hybrid environment is not without its significant challenges. The primary hurdle is often network connectivity. The service mesh control plane needs to be able to communicate with all the sidecar proxies in every cluster, which may be behind different firewalls and in networks with limited bandwidth or high latency between them. Technologies like VPNs, dedicated interconnects, or mesh gateways specifically designed for multi-cluster communication are essential to bridge these network divides and ensure the control plane remains responsive and authoritative.

Another critical consideration is the consistency of the underlying Kubernetes platforms. While most modern service meshes are designed for Kubernetes, different clouds or on-prem setups might be running different versions or even slightly different distributions (e.g., EKS vs. GKE vs. bare-metal K8s). Ensuring that the service mesh components deploy and operate flawlessly across this heterogeneity requires careful planning and testing. The goal is to avoid a scenario where environment-specific quirks negate the very uniformity the mesh is supposed to provide.
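Part of that planning can be automated with a simple pre-flight check. The following sketch flags clusters whose Kubernetes version falls outside a supported range. The cluster names, version strings, and range bounds are made up for illustration; the actual supported range would come from the chosen mesh's documentation.

```python
from typing import Dict, List, Tuple


def parse_major_minor(version: str) -> Tuple[int, int]:
    """Extract (major, minor) from strings such as "v1.29.4-eks-036c24b"."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def unsupported_clusters(
    cluster_versions: Dict[str, str],
    min_supported: str,
    max_supported: str,
) -> List[str]:
    """Return the sorted names of clusters outside the supported version range."""
    low = parse_major_minor(min_supported)
    high = parse_major_minor(max_supported)
    return sorted(
        name
        for name, version in cluster_versions.items()
        if not low <= parse_major_minor(version) <= high
    )
```

Running a check like this in CI before a mesh upgrade surfaces version drift early, before it shows up as an environment-specific failure.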

Looking ahead, service mesh in hybrid cloud management is moving toward greater simplification and integration. We are seeing the emergence of "mesh-less" or sidecar-less designs and API-driven approaches that aim to deliver the benefits without the operational overhead of managing a full-blown mesh. Furthermore, deep integration with GitOps workflows is becoming standard: security and routing policies are declared in Git and automatically synchronized and applied across all environments, making management declarative and automated. The service mesh is evolving from a networking layer into the intelligent nervous system for the entire hybrid cloud application portfolio, enabling agility, resilience, and security at a global scale.
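The GitOps pattern boils down to comparing the state declared in Git with the state applied in each cluster and acting on the difference. Below is a minimal sketch of that comparison. `plan_sync` and the policy dictionaries are hypothetical, and real GitOps tools perform considerably more work (ordering, health checks, pruning rules) than this.

```python
from typing import Any, Dict, List


def plan_sync(
    desired: Dict[str, Any],
    actual: Dict[str, Any],
) -> Dict[str, List[str]]:
    """Compare declared policies with applied ones, keyed by policy name.

    Returns the names to create, update, and delete so that the cluster
    converges on what is declared in Git.
    """
    desired_names = desired.keys()
    actual_names = actual.keys()
    return {
        "create": sorted(desired_names - actual_names),
        "update": sorted(
            name
            for name in desired_names & actual_names
            if desired[name] != actual[name]
        ),
        "delete": sorted(actual_names - desired_names),
    }
```

Running the same plan against every cluster, with the Git repository as the only input, is what keeps policies identical across on-prem and public cloud environments.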

In conclusion, implementing a service mesh is no longer merely an architectural decision for a single cloud-native application; it is a foundational choice for any enterprise serious about mastering the complexity of a hybrid cloud world. It provides the missing layer of unified control, turning a fragmented collection of clouds and data centers into a cohesive, manageable, and observable distributed system. As the technology matures and addresses its integration challenges, it is likely to become as indispensable as the cloud platforms it helps to tame, allowing organizations to realize the promise of hybrid cloud without being overwhelmed by its inherent complexity.

By /Aug 26, 2025
