The landscape of artificial intelligence is witnessing a subtle yet profound shift as industry leaders and research institutions increasingly turn their attention to the strategic refinement of small language models (SLMs). Unlike their larger counterparts, which dominate headlines with sheer scale, these compact models are being meticulously tailored for specialized domains, promising efficiency, precision, and accessibility previously unattainable in broader AI systems.

In recent months, advancements in training methodologies have enabled developers to inject deep domain-specific knowledge into SLMs without the exorbitant computational costs associated with massive neural networks. By leveraging techniques such as targeted data curation, transfer learning from general-purpose foundations, and iterative fine-tuning, researchers are creating models that excel in narrow but critical fields like legal analysis, medical diagnostics, or financial forecasting. This approach not only reduces latency and resource demands but also enhances the model's reliability in high-stakes environments where accuracy is paramount.
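To make "targeted data curation" concrete, here is a minimal sketch of one possible filtering step: drop duplicate documents and keep only those that look in-domain. The vocabulary in `DOMAIN_TERMS`, the `min_score` threshold, and the function names are all illustrative assumptions; real pipelines typically rely on much richer signals than keyword overlap.

```python
import re

# Hypothetical domain vocabulary (assumption); a real project would build
# this list with domain experts or a trained classifier.
DOMAIN_TERMS = {"contract", "clause", "liability", "indemnity", "plaintiff"}


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies match."""
    return re.sub(r"\s+", " ", text.strip().lower())


def domain_score(text: str) -> float:
    """Fraction of alphabetic tokens that appear in the domain vocabulary."""
    tokens = re.findall(r"[a-z]+", text.lower())
    if not tokens:
        return 0.0
    return sum(token in DOMAIN_TERMS for token in tokens) / len(tokens)


def curate(documents, min_score=0.1):
    """Keep in-domain documents, skipping duplicates after normalization."""
    seen = set()
    kept = []
    for doc in documents:
        key = normalize(doc)
        if key in seen:
            continue  # duplicate of a document we already processed
        seen.add(key)
        if domain_score(doc) >= min_score:
            kept.append(doc)
    return kept
```

Calling `curate` on a mixed list of legal and unrelated texts would return only the legal ones, each at most once. The threshold trades recall for precision and would need tuning per domain.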

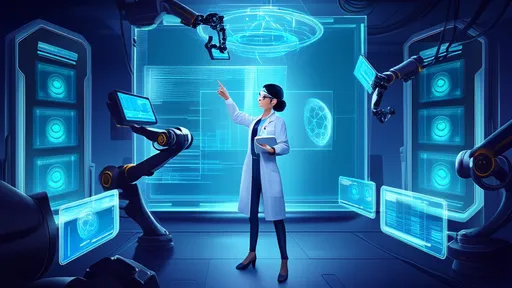

One of the most compelling aspects of this trend is its democratizing effect on AI adoption. Smaller organizations, previously priced out of the AI race by the infrastructure that large models require, can now deploy sophisticated language tools tailored to their own needs. For instance, a regional healthcare provider might implement an SLM fine-tuned on medical literature and patient records to assist with preliminary diagnoses, while a law firm could use a model specialized in contract law to streamline document review. These applications show how vertical specialization is making AI more practical and impactful at a grassroots level.

However, the path to effective domain-specific training is fraught with challenges. Curating high-quality, representative datasets remains a significant hurdle, particularly in fields where data is scarce or sensitive. Moreover, avoiding overfitting, where the model fits its training data so closely that it performs poorly on new inputs, requires careful balancing during training. A related risk is over-specialization, in which the model loses the general language ability it started with. Researchers are addressing these issues through approaches such as synthetic data generation, cross-domain validation, and hybrid architectures that blend specialized knowledge with broader contextual understanding.
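One way to picture the balancing act is checkpoint selection against two validation sets, one in-domain and one general. The sketch below is an assumption-laden illustration rather than an established recipe: the function name, the loss lists, and the 10% tolerance are all hypothetical.

```python
def pick_checkpoint(domain_losses, general_losses, max_general_increase=0.10):
    """Pick the epoch with the lowest domain validation loss among epochs
    whose general validation loss stays within a relative tolerance of the
    loss measured at epoch 0.

    Both arguments are per-epoch validation losses of equal length.
    Returns the chosen epoch index, or None if the lists are empty.
    """
    if not domain_losses or not general_losses:
        return None
    baseline = general_losses[0]
    limit = baseline * (1 + max_general_increase)
    best = None
    for epoch, (domain, general) in enumerate(zip(domain_losses, general_losses)):
        if general > limit:
            continue  # general ability degraded too much at this epoch
        if best is None or domain < domain_losses[best]:
            best = epoch
    return best


# Illustrative numbers only: domain loss keeps falling, but general loss
# drifts upward as the model specializes.
domain = [2.0, 1.5, 1.2, 1.0, 0.9]
general = [1.0, 1.02, 1.05, 1.2, 1.4]
chosen = pick_checkpoint(domain, general)
```

With these made-up curves the later epochs are rejected even though their domain loss is lower, because general loss has risen past the tolerance.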

Ethical considerations also come to the forefront as SLMs penetrate specialized sectors. In domains like healthcare or finance, biased training data could lead to flawed recommendations with serious real-world consequences. Ensuring transparency, fairness, and accountability in these models is not just a technical necessity but a moral imperative. Developers are increasingly adopting frameworks for ethical AI, incorporating bias detection mechanisms, and engaging domain experts throughout the training lifecycle to mitigate these risks.
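As a rough illustration of what a bias detection check can look like, the sketch below computes the gap in positive-prediction rates between groups, a metric often called demographic parity difference. The record format and function names are assumptions made for this example, and a single metric like this is only one signal among many that a real audit would consider.

```python
from collections import defaultdict


def positive_rate_by_group(records):
    """records: iterable of (group, prediction) pairs, prediction in {0, 1}.

    Returns a dict mapping each group to its share of positive predictions.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in records:
        totals[group] += 1
        positives[group] += prediction
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(records)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Made-up predictions for two hypothetical groups, "A" and "B".
sample = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 0),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]
gap = parity_gap(sample)
```

A gap near zero means the groups receive positive predictions at similar rates; a large gap is a prompt for domain experts to investigate, not proof of unfairness on its own.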

The future of SLMs in vertical domains looks exceptionally promising. As techniques for efficient training continue to evolve, we can expect these models to become even more adept at understanding nuanced domain-specific language, cultural contexts, and even unspoken assumptions within specialized fields. This will likely spur innovation in areas such as personalized education, where SLMs could tutor students in complex subjects, or in creative industries, where they might assist in designing products or generating artistic content tailored to specific aesthetic traditions.

Ultimately, the move toward vertically fine-tuned small language models represents a maturation of AI technology, one that prioritizes depth over breadth, quality over quantity, and practicality over spectacle. By focusing on the unique demands of specialized domains, developers are not only expanding the reach of artificial intelligence but also building tools that integrate more seamlessly and meaningfully into professional and everyday life.

By /Aug 26, 2025
